Spatial Information to Somatosensory Dimensional Information Soft Robotic Vest

Converting spatial data about the environment into tactile sensory information projected onto the patient's body.

Besides neuroprosthetics, I also build a variety of equipment to help people with other disabilities. The most important thing I have learned along the way is that any problem can be solved with effort; it is a matter of time and of breaking a complex problem down into small pieces. In college, I have focused on building human-enhancement and rehabilitation robots. This semester, I am working at the NYU Langone Neuroimaging laboratory on an ARKit project that helps blind people. I had no prior experience with Xcode or Swift, and building a new app from scratch is tough, so I broke the project down into three parts: find an object, find the object's center, then measure the distance from the user to the center of the object. Each step builds on the previous one, which makes the whole project much easier. Recently, I proposed and designed a new device that reacts to the distance information from the iPhone and will help blind people perceive their surroundings. It is a matrix of soft robotic actuators that converts spatial information from LiDAR into tactile input for the human body.
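To make the three-part breakdown concrete, here is a minimal sketch of the last two steps, written in C++ rather than the app's Swift. It assumes that step one (finding the object) already produced an axis-aligned 3D bounding box in meters. The `Point3` and `BoundingBox` types and both function names are hypothetical and are not taken from the actual project.

```cpp
#include <cmath>

// Hypothetical 3D point, in meters.
struct Point3 {
    double x, y, z;
};

// Hypothetical result of step 1 (find an object): an axis-aligned box
// described by its minimum and maximum corners.
struct BoundingBox {
    Point3 min;
    Point3 max;
};

// Step 2: the center of the object is the midpoint of the two corners.
Point3 boxCenter(const BoundingBox& box) {
    return Point3{(box.min.x + box.max.x) / 2.0,
                  (box.min.y + box.max.y) / 2.0,
                  (box.min.z + box.max.z) / 2.0};
}

// Step 3: straight-line (Euclidean) distance from the user to the center.
double distanceBetween(const Point3& user, const Point3& target) {
    const double dx = target.x - user.x;
    const double dy = target.y - user.y;
    const double dz = target.z - user.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}
```

With the user at the origin and a box spanning (2, -1, 3) to (4, 1, 5), `boxCenter` gives (3, 0, 4) and `distanceBetween` gives 5 meters. Each function only consumes the output of the previous step, which mirrors how the parts were built one on top of the other.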


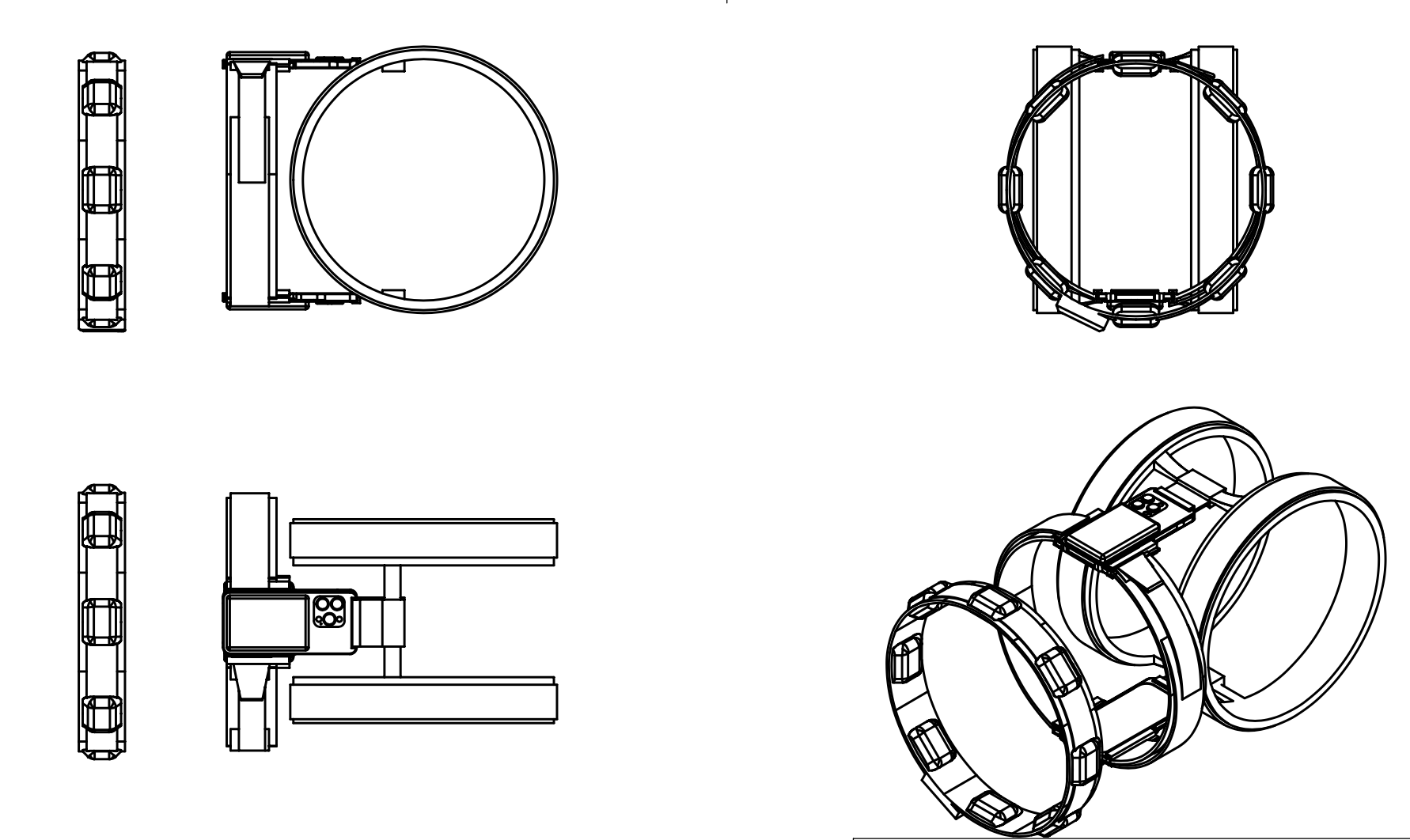

The iPhone's LiDAR collects the spatial information and sends it over Bluetooth to the belt, which is controlled by an Arduino.
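The write-up does not describe the message format sent over Bluetooth, so the following is only an illustrative sketch of one way the phone and the Arduino could exchange data: a fixed-size frame with a start byte, one level byte per actuator, and an XOR checksum. The frame layout, the `0xAA` start byte, and the function names are all assumptions made for this example.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

constexpr std::size_t kActuatorCount = 8;   // the belt has 8 soft actuators
constexpr std::uint8_t kStartByte = 0xAA;   // assumed frame marker

using Levels = std::array<std::uint8_t, kActuatorCount>;
// Assumed layout: [start byte][8 level bytes][XOR checksum of the levels]
using Frame = std::array<std::uint8_t, kActuatorCount + 2>;

// Sender side: pack one level per actuator into a frame.
Frame encodeFrame(const Levels& levels) {
    Frame frame{};
    frame[0] = kStartByte;
    std::uint8_t checksum = 0;
    for (std::size_t i = 0; i < kActuatorCount; ++i) {
        frame[i + 1] = levels[i];
        checksum ^= levels[i];
    }
    frame[kActuatorCount + 1] = checksum;
    return frame;
}

// Receiver side: returns true and fills `out` only if the frame is intact.
bool decodeFrame(const Frame& frame, Levels& out) {
    if (frame[0] != kStartByte) {
        return false;
    }
    std::uint8_t checksum = 0;
    for (std::size_t i = 0; i < kActuatorCount; ++i) {
        checksum ^= frame[i + 1];
    }
    if (checksum != frame[kActuatorCount + 1]) {
        return false;
    }
    for (std::size_t i = 0; i < kActuatorCount; ++i) {
        out[i] = frame[i + 1];
    }
    return true;
}
```

A fixed 10-byte frame keeps parsing on a microcontroller simple, and the checksum lets the receiver drop a corrupted frame instead of driving an actuator with a bad value.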


The original design used a matrix of vibration motors to convey distance and spatial information, but spatial information is very hard to distinguish through vibration. This belt is therefore composed of 8 separate soft robotic actuators. The air pressure level in each actuator shows the user how close they are to the surrounding objects.
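The relationship between distance and air pressure can be sketched as a simple linear mapping in which closer objects produce higher pressure. The distance range (0.5 m to 4.5 m), the 0 to 255 level scale, and the function names below are assumptions chosen for illustration; the actual belt may use a different range or a non-linear curve.

```cpp
#include <algorithm>
#include <array>
#include <cstddef>
#include <cstdint>

constexpr std::size_t kActuatorCount = 8;    // from the text: 8 soft actuators
constexpr double kNearDistanceM = 0.5;       // assumed: at or below this, full pressure
constexpr double kFarDistanceM = 4.5;        // assumed: at or beyond this, no pressure
constexpr double kMaxLevel = 255.0;          // assumed 8-bit pressure command

// Map one distance (meters) to a pressure level: closer means higher.
std::uint8_t pressureForDistance(double distanceM) {
    // Clamp into the supported range so out-of-range readings saturate.
    const double clamped =
        std::max(kNearDistanceM, std::min(distanceM, kFarDistanceM));
    // 1.0 when the object is nearest, 0.0 when it is farthest.
    const double closeness =
        (kFarDistanceM - clamped) / (kFarDistanceM - kNearDistanceM);
    // Round to the nearest integer level.
    return static_cast<std::uint8_t>(closeness * kMaxLevel + 0.5);
}

// Apply the mapping to one distance reading per actuator.
std::array<std::uint8_t, kActuatorCount> pressuresForDistances(
    const std::array<double, kActuatorCount>& distancesM) {
    std::array<std::uint8_t, kActuatorCount> levels{};
    for (std::size_t i = 0; i < kActuatorCount; ++i) {
        levels[i] = pressureForDistance(distancesM[i]);
    }
    return levels;
}
```

Under these assumptions, an object 0.5 m away drives its actuator at level 255, one at 2.5 m at level 128, and anything at 4.5 m or farther at level 0. Unlike vibration, each actuator holds a steady pressure, so the user can compare neighboring actuators to judge which direction is closest.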